We shall now present several classical engineering issues through illustrative examples. These issues are representative of the typical problems addressed by systems architecting. We classified them into two categories: on the one hand, product problems, which refer to purely architectural flaws leading to a bad design of the product, and on the other hand, project problems, which refer to organizational issues leading to a poorly functioning project. An overview of these problems is presented in Table 13 below. The details of our examples and analyses can be found in the remainder of this appendix.

| Category | Problem | Typical issue | Example |
|---|---|---|---|
| Product problems | Product problem 1 – The product system model does not capture reality | The system design is based on a model that does not match reality | The failure of the Calcutta subway |
| | Product problem 2 – The product system has undesirable emergent properties | A complex integrated system has unexpected and/or undesired emergent properties, arising from a local problem that has global consequences | The explosion of the Ariane 5 satellite launcher during its first flight |
| Project problems | Project problem 1 – The project system has integration issues | The engineering of the system is not done collaboratively | The huge delays of the Airbus A380 project |
| | Project problem 2 – The project system diverts the product mission | The project forgets the mission of the product | The failure of the Denver airport luggage management system |

Table 13 – Examples of typical product and project issues addressed by systems architecting

B.1 Product problem 1 – The product system model does not capture reality

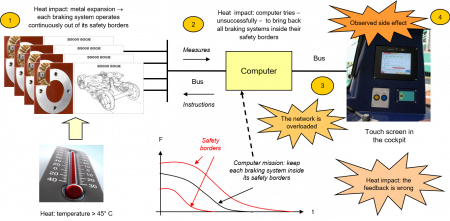

To illustrate this first product architecture issue, we will consider the Calcutta subway case¹, which occurred when a very strong heat wave (45°C in the shade) struck India during the summer. The cockpit touch screens of the subway trains then went completely blank, so that the drivers were no longer able to operate the trains. As a consequence, the subway stopped running for a few days, until the temperature returned to normal and the trains could again be operated as usual. This led to huge chaos in the city and to heavy financial penalties for the company that had built the subway.

¹ This case is not public. We were thus obliged to hide its real location and to simplify its presentation, without however altering its nature and its systems architecting fundamentals.

To understand what happened, the subway designers immediately tested the touch screens, but these components worked fine under high-temperature conditions. It then took several months to understand the complete chain of events that led to the observed malfunction, which was, quite surprisingly for the engineers who performed the analysis, of a systemic nature, as we will now see. The analysis revealed that the starting point of the problem was the bogies, that is to say the mechanical structures that carry the subway wheels. Each subway car is supported by two bogies, each with four wheels. The important point is that these bogies were made essentially of metal. This metal expanded under the high heat, leading to an unexpected behavior of the bogies that we can now explain.

Each bogie is also fitted with its own braking system. These braking systems are regulated by the central subway computer, in which a control law was embedded. When braking is initiated, this control law requires each local braking system to exert a braking force that lies between a lower and an upper safety bound². The role of the central computer is thus to ensure that each braking force always stays within these two safety bounds during braking, which it achieves by relaxing or increasing the braking force of a given braking system. The key point was that the underlying control law was not valid at high temperature. This control law had indeed been designed, and was quite robust, for a Western environment where such strong heat never occurs. Hence nobody knew that it was no longer correct in such a situation.

² Braking forces can be neither too strong (to prevent the wheels from damaging the rails) nor too weak (to avoid wheel slip, which would result in no braking at all).

Figure 62 – The Calcutta subway case

What happened can now be easily explained. The high temperature caused the same metal expansion in all the subway bogies. Hence all bogies were continuously operating outside their safety bounds during braking. The computer, however, was not aware of that situation and kept trying to bring all braking systems back within their safety bounds by applying its fixed control law, which was unfortunately wrong in this new context. As a consequence, there was a permanent exchange of messages between the central computer and the numerous braking systems along the subway. This overloaded the network, which was not dimensioned to support such heavy traffic. The blank touch screens were thus just a side effect of this overload: the touch screens are connected to the central computer through the same network, which explains why nothing wrong was found at the touch-screen level.
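The mechanism just described can be illustrated with a toy model. This is a minimal sketch, not a reconstruction of the actual subway software: the safety band, the number of bogies, the network capacity and the thermal offset are all hypothetical values chosen for illustration. It only shows why a fixed control law, combined with an offset shared by every bogie, produces a correction storm that never dies out.

```python
"""Toy model of the Calcutta subway braking loop (all numbers are hypothetical)."""

LOWER, UPPER = 4.0, 6.0   # hypothetical safety band for the braking force
NETWORK_CAPACITY = 50     # hypothetical max correction messages per braking cycle


def fixed_control_law() -> float:
    """Commanded braking force: the middle of the safety band.

    The law implicitly assumes a temperate climate, i.e. that the force
    actually exerted equals the commanded one."""
    return (LOWER + UPPER) / 2


def messages_per_cycle(n_bogies: int, thermal_offset: float) -> int:
    """Correction messages the central computer sends in one braking cycle.

    Every bogie whose actual force leaves [LOWER, UPPER] triggers one message.
    Heat expands the metal identically on all bogies, modeled here as the same
    offset added to every actual force."""
    commanded = fixed_control_law()
    actual = [commanded + thermal_offset for _ in range(n_bogies)]
    return sum(1 for force in actual if not LOWER <= force <= UPPER)


def run(n_bogies: int, thermal_offset: float, cycles: int) -> list[bool]:
    """For each cycle, report whether the shared network is overloaded.

    The fixed law re-commands the same force every cycle, so an out-of-band
    offset is never corrected: the overload lasts as long as the heat does."""
    return [messages_per_cycle(n_bogies, thermal_offset) > NETWORK_CAPACITY
            for _ in range(cycles)]


normal = run(n_bogies=200, thermal_offset=0.0, cycles=5)
heat_wave = run(n_bogies=200, thermal_offset=1.5, cycles=5)
print(normal)     # [False, False, False, False, False]
print(heat_wave)  # [True, True, True, True, True]
```

Because the offset affects all bogies in the same way, the number of messages scales with the size of the fleet rather than with the number of faulty components, which is what turns a local modeling error into a network-wide failure.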

We can thus see that this case highlights a typical modeling problem (here, the fact that the braking control law was wrong in the high-temperature Indian context), but also an integration issue³ that ultimately led to an operational failure through a “domino” effect⁴, where an initial local problem progressively propagated along the subway and resulted in a global breakdown of the system. One should therefore remember that it is a key good practice to permanently check and ensure the consistency between a system model and the reality it models, since reality will always be stronger than any model, as the Calcutta subway case illustrates.

³ In that case, all components indeed work well individually. The problem is a subway system-level problem that cannot be located in any single component, but rather in the poor integration of all the components involved.

⁴ Another classical example of a domino effect is provided by O. de Weck [28], who described the cascading redesign of the US F/A-18 fighter aircraft for the Swiss Air Force. This aircraft was initially designed in 1978 for the US Navy as a carrier-based fighter and attack aircraft, with an expected life of 3,000 flight hours, missions lasting 90 minutes on average, a maximal acceleration of 7.5 g and 15 years of useful life. Because of its policy of neutrality and its small, landlocked and mountainous territory, Switzerland wanted a land-based interceptor, with an expected life of 5,000 flight hours, missions lasting 40 minutes on average, a maximal acceleration of 9.0 g and 30 years of useful life. Engineers concluded that it was sufficient to change some aluminum components near the engine that were not robust to fatigue in order to meet the Swiss requirements. These components were then redesigned in titanium. Unfortunately, this shifted the aircraft's center of gravity, and the fuselage had to be reinforced to solve that issue. This further change led to transverse vibrations that required additional reinforcements, added weight and modifications to the flight control system. Many changes continued to propagate through the aircraft, up to the point of affecting the industrial processes and the organization of the assembly plant. A 500-gram change thus finally led to 10 million dollars of modifications that had never been expected.

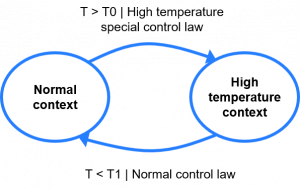

Note finally that this kind of modeling problem is typically addressed by systems architecting, which proposes an answer through “operational architecting” (we refer to both Chapter 3 and Chapter 4 for more details). Such an analysis focuses on understanding the environment of the system under consideration. In the Calcutta subway case, a typical operational analysis would have consisted in considering India as a key stakeholder of the subway and then trying to understand what is different in India compared to the Western countries where the subway was initially developed. It is then easy to find that very strong heat waves statistically occur in India every decade, which creates an India-specific “High temperature” context that must be specifically analyzed. A good operational systems architecting analysis should then be able to derive the braking system lifecycle presented in Figure 63, with two states that respectively model the normal and high-temperature contexts, and two transitions that model the events⁵ that trigger a change of state and of braking control law.

⁵ Here, the fact that the temperature becomes higher (resp. lower) than some threshold T0 (resp. T1) when the braking system is in the “Normal” context (resp. the “High temperature” context).

Figure 63 – The missing operational analysis in the Calcutta subway case

Such a diagram is typically an (operational) system model. It looks very simple⁶, but one must understand that introducing the “High temperature” context and the transition leading to that state would have avoided an absurd operational failure and saved millions of euros.

⁶ This simplicity is unfortunately an issue for systems architecting. Most people will agree that one cannot manipulate partial differential equations without suitable training in applied mathematics. But the same people will surely think that no specific competency is required to write down simple operational models such as the one in Figure 63, which is unfortunately not the case. We do believe that only good systems architects can achieve such an apparently simple result, which is always the consequence of a good combination of training and personal skills.
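The two-state lifecycle of Figure 63 can be sketched as a small state machine. This is an illustration under stated assumptions, not the subway's actual design: the threshold values T0 and T1 are hypothetical, and choosing T1 below T0 (hysteresis, so that the system does not oscillate between contexts around a single threshold) is our own design choice. The control-law names are placeholders.

```python
from enum import Enum


class Context(Enum):
    NORMAL = "Normal"
    HIGH_TEMPERATURE = "High temperature"


T0 = 40.0  # hypothetical threshold (°C) for entering the "High temperature" context
T1 = 35.0  # hypothetical threshold (°C) for returning to the "Normal" context

# Placeholder names: each context selects its own braking control law.
CONTROL_LAWS = {
    Context.NORMAL: "nominal braking control law",
    Context.HIGH_TEMPERATURE: "high-temperature braking control law",
}


def next_context(current: Context, temperature: float) -> Context:
    """Apply the two transitions of the lifecycle to one temperature reading."""
    if current is Context.NORMAL and temperature > T0:
        return Context.HIGH_TEMPERATURE
    if current is Context.HIGH_TEMPERATURE and temperature < T1:
        return Context.NORMAL
    return current  # no transition fires: stay in the current context


def contexts_over(temperatures, start=Context.NORMAL):
    """Return the context reached after each temperature reading."""
    ctx = start
    history = []
    for t in temperatures:
        ctx = next_context(ctx, t)
        history.append(ctx)
    return history


history = contexts_over([30, 42, 45, 38, 34, 30])
print([c.value for c in history])
# ['Normal', 'High temperature', 'High temperature', 'High temperature', 'Normal', 'Normal']
```

Note that the reading of 38°C keeps the system in the “High temperature” context: it only returns to “Normal” once the temperature drops below T1.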

B.2 Product problem 2 – The product system has undesirable emergent properties

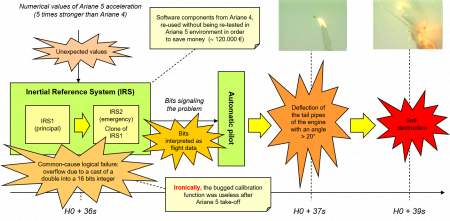

The second product-oriented case study that we will discuss is the explosion of the very first Ariane 5 satellite launcher, which is well known thanks to the remarkable work of the Lions commission, which published a detailed, public and fully transparent report on this accident (see [56]). This case has been widely discussed in the engineering literature, but the conclusions drawn from it mainly focused on how to better master the design of critical real-time software. We will present here a systems architecting interpretation of this case which, to the best of our knowledge, has never been made before.

Let us recall what happened on June 4, 1996 during the first flight of Ariane 5. The flight of the launcher was perfect from second 0 up to second 36 after lift-off. At second 36.7, however, the two inertial systems of the launcher failed simultaneously, which led the automatic pilot, at second 37, to misinterpret the error data transmitted by the inertial systems. The automatic pilot then brutally corrected the trajectory of Ariane 5, leading to the mechanical break-up of the boosters and thus to the initiation of the self-destruction procedure of the launcher, which exploded at second 39.

Figure 64 – The Ariane 5 case

As one can easily guess, the cost of this accident was tremendously high and probably reached around 1 billion euros. The direct cost of the lost satellite payload is known to have been around 370 million euros. But there was also the cost of recovering the most dangerous fragments of the launcher (such as those containing fuel), which had crashed in the Guyana swamps, an area that is quite difficult to access; this took one month of work. Moreover, there were huge indirect costs due to the delay of the Ariane 5 program: the second flight was only performed more than a year later, and the first commercial flight of the launcher only took place on December 10, 1999, more than three years after the accident.

As already stated, the causes of this tragic accident have fortunately been fully analyzed in the Lions commission report (cf. [56]). The origin of the accident could be traced back to the reuse of the inertial reference system (IRS)⁷ of Ariane 4. This complex critical software component worked perfectly on Ariane 4, and it was thus reused unchanged on Ariane 5 without being retested⁸ in the new environment. Unfortunately, Ariane 5 was a much more powerful launcher than Ariane 4, and the horizontal velocity values of Ariane 5, which are inputs of the inertial reference system, were five times bigger than for Ariane 4. Inside the IRS, a value related to this horizontal velocity (the “horizontal bias”) was converted from a 64-bit floating-point number into a 16-bit signed integer, a conversion that was safe for Ariane 4 values but not for the much bigger Ariane 5 ones. As a consequence⁹, an overflow occurred during the execution of the IRS software.

⁷ An inertial reference system is a software-based system that continuously calculates the position, orientation and velocity of a moving object. In the context of Ariane 5, it is thus a critical component, since most of the other systems depend on its calculations.

⁸ The engineer in charge of the IRS proposed to retest it in the new Ariane 5 environment, but it was decided not to follow that proposal in order to save around 120,000 euros of testing costs.

⁹ The IRS software was written in Ada. According to the Lions report [56], the out-of-range conversion raised an “Operand Error” exception which, unlike the conversions of comparable variables in the same code, was not protected; the IRS then declared a failure and stopped. This purely syntactic type conversion ignored the physical meaning of the data involved, which is what led to the observed overflow.
The error codes resulting from this software failure were then unfortunately interpreted as flight data by the automatic pilot of Ariane 5, which, one second after receiving these error codes, corrected the trajectory of the launcher by an angle of more than 20°. This resulted almost immediately in the mechanical break-up of the launcher boosters and, one second later, in the initiation of the self-destruction procedure.
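According to the Lions report [56], the faulty operation was a conversion of a 64-bit floating-point value into a 16-bit signed integer. The minimal Python sketch below mimics such a checked conversion: Python floats are 64-bit, the 16-bit range is the standard one, but the `OperandError` class is a hypothetical stand-in named after the exception cited in the report, and the two input values are purely illustrative (only their factor of five echoes the text). Rounding with `round()` is also an assumption; the exact rounding rule does not matter for the overflow.

```python
INT16_MIN, INT16_MAX = -32_768, 32_767  # range of a 16-bit signed integer


class OperandError(ArithmeticError):
    """Hypothetical stand-in for the exception raised by an out-of-range conversion."""


def to_int16(value: float) -> int:
    """Convert a 64-bit float to a 16-bit signed integer.

    Raises OperandError instead of silently returning a meaningless value."""
    converted = round(value)
    if not INT16_MIN <= converted <= INT16_MAX:
        raise OperandError(f"{value!r} does not fit in a 16-bit signed integer")
    return converted


ARIANE4_LIKE = 7_000.0           # illustrative value: fits in 16 bits
ARIANE5_LIKE = 5 * ARIANE4_LIKE  # five times bigger: 35,000 > 32,767

print(to_int16(ARIANE4_LIKE))  # 7000
try:
    to_int16(ARIANE5_LIKE)
except OperandError as err:
    # In the sketch the exception is caught; on the launcher it was not handled.
    print("IRS failure:", err)  # IRS failure: 35000.0 does not fit in a 16-bit signed integer
```

The same function is correct for one environment and fatal for the other: nothing in the code itself changed, only the range of the values it receives.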

The Ariane 5 explosion is hence a typical integration issue¹⁰. All its components worked perfectly individually, but did not work correctly together once integrated. Hence one should remember that a component of an integrated system is never correct by itself: it is only correct relative to the set of components it interfaces with. When this set evolves, one must thus check that the target component is still properly integrated with its environment, since the fact that the IRS module fulfilled Ariane 4 requirements cannot ensure that it fulfills Ariane 5 requirements.

¹⁰ Moreover, there were also a number of software engineering mistakes, presented below, which show that the integrated hardware-software nature of the launcher was not really taken into account by the designers. Issue 1 – misunderstanding of software specificity: only physical component failures (which are statistical in nature) were considered, whereas logical component failures (which are systematic) are of a totally different nature. Note that this kind of software failure can only be addressed by formal model checking or by a dissimilar redundancy strategy (which consists in having two different teams develop two different versions of the software component from the same specification, in order to ensure a different distribution of bugs within the two versions). Issue 2 – poor software documentation: the conditions for a correct behavior of the IRS module were not explicitly documented in the source code. Issue 3 – poor software architecture: raising a local exception in a software component should normally never lead to its global failure.
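The point about logical failures and redundancy can be illustrated with a short sketch. It assumes a simplified redundancy scheme in which the first channel that does not fail provides the output; the saturating “version B” is a hypothetical second implementation of the same specification, not something that existed on Ariane 5. Two identical copies of a channel share the same systematic fault, whereas a dissimilar version does not.

```python
INT16_MIN, INT16_MAX = -32_768, 32_767


def channel_checked(value: float) -> int:
    """Version A: checked conversion that fails outside the 16-bit range."""
    converted = round(value)
    if not INT16_MIN <= converted <= INT16_MAX:
        raise OverflowError(f"{value!r} out of 16-bit range")
    return converted


def channel_saturating(value: float) -> int:
    """Hypothetical version B, written independently from the same specification:
    it clamps out-of-range values to the nearest bound instead of failing."""
    return max(INT16_MIN, min(INT16_MAX, round(value)))


def redundant_output(channels, value):
    """Return the output of the first channel that does not fail, or None if all fail."""
    for channel in channels:
        try:
            return channel(value)
        except OverflowError:
            continue  # switch over to the next (backup) channel
    return None


identical = [channel_checked, channel_checked]      # two copies of the same software
dissimilar = [channel_checked, channel_saturating]  # two different implementations

print(redundant_output(identical, 35_000.0))   # None: both copies fail on the same input
print(redundant_output(dissimilar, 35_000.0))  # 32767: version B survives
```

Whether saturation is an acceptable behavior is itself a design question; the sketch only shows that duplicating identical software protects against random hardware faults, not against a systematic logical fault.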

This example also shows that an emergent property of integration, of a kind that is usually not looked for, can be the death of the system. The Ariane 5 system was indeed incorrect by design, since the launcher, as it was integrated, could only explode. In other words, the destruction of Ariane 5 was embedded in its architecture, and it can be seen as a purely logical consequence¹¹ of its integration mode. This extreme, and fortunately rare, case thus illustrates well the real difficulty of mastering the integration of complex systems!

¹¹ The IRS component of Ariane 5 consisted of two identical software modules (which, as explained in the previous footnote, was of no use given the logical nature of the failure, which repeated identically in each of these modules). The 36-second delay between lift-off and the crash of the IRS can thus be decomposed into two periods of 18 seconds, each necessary for one module to logically crash. As a consequence, we can state the following architectural “theorem”, which illustrates the incorrectness by design of Ariane 5. Theorem: let us suppose that the IRS component of Ariane 5 has N identical software modules. The launcher will then be destroyed at second 18*N+3.

Note finally that systems architecting provides a number of methodological tools to avoid such integration issues, among which interface and impact analyses. In the Ariane 5 context, a simple interface check on types and value ranges would, for instance, have shown that the inputs of the inertial reference system were simply not compatible with the expected ones, which would probably have avoided a huge disaster!
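Such an interface check can be sketched as a comparison between what a producer emits and what a consumer accepts. This is a minimal illustration under our own assumptions: the `PortSpec` structure is hypothetical, and all ranges below are illustrative rather than actual Ariane data (only the 16-bit bounds are standard).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortSpec:
    """Hypothetical interface description: data type plus physical value range."""
    name: str
    data_type: str
    low: float
    high: float


def interface_issues(producer: PortSpec, consumer: PortSpec) -> list[str]:
    """List the incompatibilities between what a producer emits and what a consumer accepts."""
    issues = []
    if producer.data_type != consumer.data_type:
        issues.append(f"type mismatch: {producer.data_type} -> {consumer.data_type}")
    if producer.low < consumer.low or producer.high > consumer.high:
        issues.append(
            f"range [{producer.low}, {producer.high}] of {producer.name} exceeds "
            f"accepted range [{consumer.low}, {consumer.high}] of {consumer.name}"
        )
    return issues


# Illustrative ranges only: the consumer accepts what fits in a 16-bit signed integer.
irs_input = PortSpec("IRS input", "float64", -32_768, 32_767)
ariane4_flight = PortSpec("Ariane 4 trajectory", "float64", -10_000, 10_000)
ariane5_flight = PortSpec("Ariane 5 trajectory", "float64", -50_000, 50_000)

print(interface_issues(ariane4_flight, irs_input))  # []
print(interface_issues(ariane5_flight, irs_input))  # prints a list with one range issue
```

The check is re-run whenever the environment of a component changes, which is exactly the step that was skipped when the IRS was moved from Ariane 4 to Ariane 5.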

B.3 Project problem 1 – The project system has integration issues

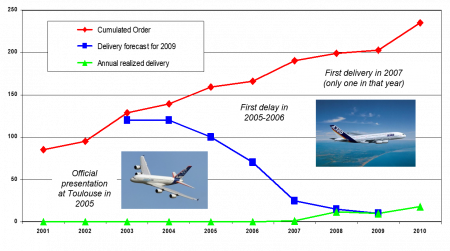

Our first “project architecture” issue is the initial Airbus A380 delivery delay, chosen because it is largely public (see [82] for an extensive presentation of this case study). Let us recall that this aircraft is currently the world’s largest passenger airliner. Its origins go back to mid-1988, when Airbus engineers began to work in secret on an ultra-high-capacity airplane in order to break the dominance that Boeing had held on that market segment since the early 1970s with its 747. It nevertheless took several years of studies before the official announcement, in June 1994, of the A3XX program, the first name of the A380 within Airbus. Interestingly, because the aeronautics market was darkening at that time, Airbus then decided to refine its design, targeting a 15–20 % reduction in operating costs over the existing Boeing 747 family. After an extensive market analysis involving over 200 focus groups, the A3XX design finally converged on a double-decker layout that provided more passenger volume than a traditional single-deck design, perfectly in line with the traditional hub-and-spoke model, as opposed to the point-to-point model that was Boeing’s paradigm for large airliners.

At the beginning of 2000, the commercial history of the A380 (the new name then given to the A3XX) began, and the first orders reached Airbus in 2001. The industrial organization was then put in place between 2002 and 2005: the A380 components are provided by suppliers from all around the world, while the main structural sections of the airliner are built in France, Germany, Spain and the United Kingdom, for final assembly in a dedicated integration facility in Toulouse. The first fully assembled A380 was unveiled in Toulouse on 18 January 2005, before its first flight on 27 April 2005. On 10 January 2006, it flew to Colombia, accomplishing both the transatlantic test and the testing of engine operation at high-altitude airports. It also arrived in North America on 6 February 2006, landing in Iqaluit, Nunavut, in Canada for cold-weather testing. On 4 September 2006, the first full passenger-carrying flight test took place. Finally, Airbus obtained the first A380 type certificates from EASA and the FAA on 12 December 2006.

During that whole period, orders kept arriving from the airlines, reaching a cumulative total of a little under 200 in 2007. The first deliveries had initially (in 2003) been planned for the end of 2006, with the objective of producing around 120 aircraft by 2009. Unfortunately, many industrial difficulties, which we discuss below, arose, and these figures had to be revised sharply downward each year¹² (cf. Figure 65). The very first commercial A380 was finally delivered at the end of 2007 and, instead of 120, only 23 airliners had been delivered by the end of 2009.

¹² Airbus announced the first delay in June 2005 and notified airlines that deliveries would be delayed by six months. This reduced the total number of planned deliveries by the end of 2009 from about 120 to 90–100. On 13 June 2006, Airbus announced a second delay, with the delivery schedule slipping an additional six to seven months. Although the first delivery was still planned before the end of 2006, deliveries in 2007 would drop to only 9 aircraft, and deliveries by the end of 2009 would be cut to 70–80 aircraft. The announcement caused a 26 % drop in the share price of Airbus’ parent company, EADS, and led to the departure of the EADS CEO, the Airbus CEO and the A380 program manager. On 3 October 2006, upon completion of a review of the A380 program, the new Airbus CEO announced a third delay, pushing the first delivery to October 2007, to be followed by 13 deliveries in 2008, 25 in 2009, and the full production rate of 45 aircraft per year in 2010.

Figure 65 – The Airbus 380 case

These delays had strong financial consequences, since they increased the earnings shortfall projected by Airbus through 2010 to €4.8 billion. It is thus worth trying to better understand the root causes of such a major failure.

These delays appear to stem from inconsistencies in the 530 km (330 miles) of electrical wiring produced in France and Germany. Airbus cited in particular as underlying causes the complexity of the cabin wiring (98,000 wires and 40,000 connectors), its concurrent design and production, the high degree of customization for each airline, and failures in configuration management and change control. These electrical wiring inconsistencies were only discovered at the final integration stage in Toulouse¹³, which was of course much too late.

¹³ A video shows a technician in Toulouse who is unable to connect two electrical wires coming from two different sections of the aircraft, because 20 centimeters of wire are missing.

The origin of this problem could be traced back to the fact that the German and Spanish Airbus facilities continued to use CATIA® version 4, while the British and French sites migrated to version 5. This caused overall configuration management problems, at least in part because wire harnesses manufactured with aluminum rather than copper conductors required special design rules, including nonstandard dimensions and bend radii. This specific information was not easily transferred between the two versions of the software, which led to inconsistent manufacturing and, in the end, to the integration issue in Toulouse. On a totally different level, the strong customization of internal equipment also induced a long learning curve for the teams, and thus further delays. Beyond these “official” causes, other plausible deep causes include cultural conflicts within the dual-headed French and German management of Airbus, and a lack, or breakdown, of communication between the geographically distributed teams of the European aircraft manufacturer.
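The kind of early cross-site consistency check that was missing can be sketched as follows. Everything here is hypothetical: the `SectionDesign` structure, the site names, the tool labels, the wire identifiers, the coordinates and the tolerance are invented for illustration and do not describe Airbus data or CATIA features. The idea is simply to compare the two sides of a section junction before manufacturing, instead of discovering the mismatch at final assembly.

```python
from dataclasses import dataclass, field


@dataclass
class SectionDesign:
    """Hypothetical summary of one site's design data for a fuselage junction."""
    site: str
    tool_version: str
    # wire id -> position (mm) where the wire ends at the junction between sections
    wire_ends_mm: dict[str, float] = field(default_factory=dict)


def junction_issues(a: SectionDesign, b: SectionDesign, tolerance_mm: float = 5.0) -> list[str]:
    """List inconsistencies between two sections that must be joined."""
    issues = []
    if a.tool_version != b.tool_version:
        issues.append(f"tool mismatch: {a.site} uses {a.tool_version}, {b.site} uses {b.tool_version}")
    for wire in sorted(a.wire_ends_mm.keys() | b.wire_ends_mm.keys()):
        if wire not in a.wire_ends_mm or wire not in b.wire_ends_mm:
            issues.append(f"{wire}: defined by only one site")
            continue
        gap = abs(a.wire_ends_mm[wire] - b.wire_ends_mm[wire])
        if gap > tolerance_mm:
            issues.append(f"{wire}: ends are {gap:.0f} mm apart")
    return issues


site_a = SectionDesign("Site A", "CAD v4", {"W1": 1000.0, "W2": 1500.0, "W3": 800.0})
site_b = SectionDesign("Site B", "CAD v5", {"W1": 1200.0, "W2": 1502.0})

for issue in junction_issues(site_a, site_b):
    print(issue)
```

With these invented inputs, the check reports the tool mismatch, a 200 mm gap on wire W1 (the “20 centimeters” of the anecdote) and a wire defined by only one site, while the 2 mm difference on W2 stays within tolerance.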

As systems architects, we may summarize such problems as typical “project architecture” issues. The issues finally observed at product level are only consequences of a lack of integration within the project, that is to say of project interfaces (to use a systems vocabulary) that were not coherent, which simply means, in more familiar terms, project teams or project tools that did not work coherently together. It is thus key to have a robust project architecture when developing complex systems, since the project system is always at least as complex as the product system it develops. Unfortunately, it is a matter of fact that the energy spent on technical issues is usually much greater than the energy spent on organizational issues, which often ultimately leads to bad project architectures in complex systems contexts and, in the end, to bad technical architectures.

B.4 Project problem 2 – The project system diverts the product mission

As a last example of a project issue, we will now consider the failure of the Denver airport luggage management system, which is well known since it is fully public (see for instance [27] or [72]).

Denver airport is currently the largest airport in the United States in terms of total land area and the 6th in the United States (the 18th in the world) in terms of passenger traffic. It was designed to be one of the main hubs of United Airlines and the main hub of two local airlines. The construction of the airport officially started in September 1989, and it was initially scheduled to open on October 29, 1993. Because of the very large distances between the three terminals of the airport and the need for fast aircraft rotations to fulfill its hub mission, the idea of automating luggage management emerged, in order to provide travelers with quick connections between planes. United Airlines promoted such a system, which was already implemented at Atlanta airport, another of its hubs. Since Denver airport was intended to be much larger, the idea evolved into using the construction of Denver’s new airport as an opportunity to improve Atlanta’s system and create the most efficient and innovative luggage management system in the world¹⁴. The plan was indeed to have 27 kilometers of transportation tracks, with 9 kilometers of interchange zones, on which 4,000 remote-controlled wagons would circulate at a constant speed of 38 km/h, for an average transportation time of 10 minutes, which was completely unprecedented.

¹⁴ The idea was to have a fully automated luggage transportation system, based on new hardware and software technologies, able to manage very large volumes of luggage.
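A back-of-the-envelope calculation shows how demanding these figures were. It uses only the numbers given above, plus one simplifying assumption of ours: that all 4,000 wagons are on the track at the same time, evenly spread over the full 27 kilometers (interchange zones included).

```python
track_m = 27_000  # 27 km of transportation tracks (from the text)
wagons = 4_000    # remote-controlled wagons (from the text)
speed_kmh = 38    # constant transportation speed (from the text)

speed_ms = speed_kmh * 1000 / 3600             # about 10.56 m/s
spacing_m = track_m / wagons                   # distance between wagons if evenly spread
headway_s = spacing_m / speed_ms               # time between two consecutive wagons
distance_in_10_min_km = speed_kmh * 10 / 60    # distance covered during an average 10-minute trip

print(f"{spacing_m:.2f} m, {headway_s:.2f} s, {distance_in_10_min_km:.1f} km")
# 6.75 m, 0.64 s, 6.3 km
```

Under this assumption, a wagon would pass any given point of the track roughly every two thirds of a second, which gives an idea of the synchronization that the control software and the mechanics had to achieve.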

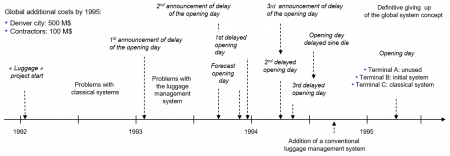

The luggage management system project started in January 1992, a little less than 2 years before the expected opening of the airport. For one year, the difficulties of this specific project remained hidden, since there were many other problems with more classical systems. However, at the beginning of 1993, it became clear that the luggage management system could not be delivered on schedule, and Denver’s mayor had to push back the opening date, first to December 1993, then to March 1994 and finally, once again, to May 15, 1994.

Unfortunately, the new automated luggage management system continued to have serious problems. In April 1994, the city invited reporters to observe the first operational test of the new automated baggage system; instead, they saw disastrous scenes in which the system was simply destroying luggage, opening bags and crushing their contents in front of them. The mayor then had to postpone the opening of the airport indefinitely. As one can imagine, no airline except United wanted to use the new “fantastic” system, which forced the abandonment of the idea of a global luggage management system for the whole airport. When the airport finally opened on February 28, 1995, only the United Airlines terminal was thus equipped with the new luggage management system, while the other terminal¹⁵ that also opened was simply equipped with totally classical systems (that is to say tugs and carts).

¹⁵ It was initially not possible to open the last airport terminal because of the time required to convert the already constructed automatic luggage management system into a standard manual one.

In 1995, the direct additional costs of this failure amounted to around $600 million, leading in the end to a cost overrun of more than one billion dollars. Moreover, the new baggage system remained a maintenance hassle¹⁶. It was finally terminated by United Airlines in September 2005 and replaced by traditional handlers manually handling cargo and passenger luggage. A TV reporter who covered the full story concluded, quite interestingly¹⁷, that “it took ten years, and tons of money, to figure out that big muscle, not computers, can best move baggage”.

¹⁶ Its nickname quickly became the “luggage system of hell”!

¹⁷ One must always remember that a system can consist of people performing only manual operations. In most cases, good systems architectures are hybrid, with both automatic and manual parts. In Denver’s case, however, the best solution was purely manual, which United Airlines took ten years to understand.

Figure 66 – The Denver luggage management system case

Looking back at that case, it is quite easy to understand why this new automatic luggage system collapsed. First, there were too many innovations¹⁸. It was at the same time the first global automated system, the first automatic system managing oversize luggage (skis!), the first system in which carriages did not stop during their service¹⁹, the first system supported by a computer network and the first system with a fleet of radio-localized carriages. There was also a huge underlying increase in complexity: compared to the similar existing Atlanta system, the new luggage management system was 10 times faster, had 14 times the maximal known capacity and managed 10 times more destinations. Moreover, the project schedule was totally unrealistic with respect to the state of the art: due to the strong schedule pressure, no physical model was built and no preliminary mechanical tests were done, whereas balancing the lines had required two years in Atlanta.

¹⁸ As a matter of fact, it is interesting to know that Marcel Dassault, the famous French aircraft engineer, refused all projects with more than one innovation! A strategy that worked quite well for him.

¹⁹ Suitcases were automatically thrown with a catapult, which easily explains the high rate of crashes.

It is thus quite easy to understand that the new luggage management system could only collapse. In some sense, it collapsed because the project team totally lost sight of the mission of that system, which was simply to transport luggage quickly within the airport at the lowest possible cost, under a strong construction constraint, since there were less than 2 years to implement the system. A simple systems architecture analysis would probably have concluded that the best solution was not to innovate and simply to use people, tugs and carts, as usual. This case study thus illustrates quite well a very classical project issue, where the project system forgets the mission of the product system and replaces it by a purely project-oriented mission²⁰ that diverts the project from achieving the product system mission. Any systems architect should therefore always keep this example in mind in order to prevent the same issue from occurring within their own scope.

²⁰ In Denver’s luggage management system case, “creating the world’s most innovative luggage management system” indeed became the only objective of the project (which is not a product system mission, but a project system one).


REFERENCES

[27] de Neufville R., The Baggage System at Denver: Prospects and Lessons, Journal of Air Transport Management, Vol. 1, No. 4, pp. 229–236, Dec. 1994

[28] de Weck O., Strategic Engineering – Designing systems for an uncertain future, MIT, 2006

[56] Lions J.L., Ariane 5 – Flight 501 Failure – Report by the Inquiry Board, ESA, 1996

[72] Schloh M., The Denver International Airport automated baggage handling system, Cal Poly, Feb 16, 1996